No branching in your tests

Why eliminating ifs in your tests leads to more predictable outcomes, plus strategies to keep your codebase decluttered.

When it comes to software development, simplicity is king. This applies even more so when it comes to testing, whether it's unit or integration tests. But in the race for efficiency, we may stumble upon a fake optimization: using if statements in tests.

Let's understand why they're problematic and how to avoid them.

The Problem

We have a behavior we want to test, and there’s a complex setup to get to it. There might be similar edge cases that we want to cover, or some external dependency to deal with. The problem arises when we try to be smart and handle that uncertainty with conditionals inside the test.

Don’t be fooled into thinking that this is efficient. It’s not.

Don't overcomplicate things. The moment you introduce an if into a test, you're essentially inviting complexity. It's a sign that the test is trying to cover too much ground.

You end up trading clarity and focus for a shortcut. That’s not efficiency. Optimal tests maintain a laser-focused approach, examining one specific aspect or behavior at a time. An if blurs this focus by trying to encompass multiple scenarios in one go.

Not to mention, tests with conditional logic are much harder to maintain. They become riddled with paths that make understanding and updating them more difficult.
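To make the problem concrete, here is a minimal sketch of the kind of test this section warns about. The `DiscountCalculator` class, its `Apply` method, and the 10% premium discount are hypothetical, invented only for this illustration.

```csharp
using Xunit;

// Hypothetical production code, invented for this illustration.
public class DiscountCalculator
{
    public decimal Apply(decimal price, bool isPremium) =>
        isPremium ? price * 0.9m : price;
}

public class DiscountCalculatorTests
{
    // Anti-pattern: one test walks through two scenarios and branches
    // to pick the right assertion. When it fails, neither the test name
    // nor the report tells you which scenario broke.
    [Fact]
    public void discount_is_applied_correctly()
    {
        var sut = new DiscountCalculator();

        foreach (var isPremium in new[] { true, false })
        {
            var result = sut.Apply(100m, isPremium);

            if (isPremium)
            {
                Assert.Equal(90m, result);
            }
            else
            {
                Assert.Equal(100m, result);
            }
        }
    }
}
```

Note that the test now mirrors the branching of the code it verifies, so a mistake in the test's own logic could go unnoticed.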

Yet, there is a deeper layer to this discussion, centering on the nature of the functions and methods we test. The ease or difficulty of testing a piece of code often boils down to its purity or impurity.

Pure and impure methods

Methods that are deterministic and side-effect-free, known in functional programming as pure functions, present a testing ideal.

Pure functions, by definition, always return the same output for the same input and have no side effects—meaning they don't alter any state outside themselves or rely on an external state.

This attribute not only aids in straightforward testing but also in enhancing the functions' reusability and integration into larger systems without complicating state management or dependencies.

On the other hand, impure functions, which depend on external states or cause side effects, complicate testing. Such functions can make the codebase harder to maintain by introducing dependencies and unpredictability.

While it's unrealistic to completely eliminate impure functions, their impact can be minimized by isolating them within the application.

This involves:

Separating pure and impure functions

Reducing the reliance on impure interactions

Facilitating dependency injection or fakes for testing.
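As a rough sketch of these three points, the example below pushes an impure call (reading the system clock) behind an interface and injects a fake in the test. `IClock`, `FixedClock`, and `TrialPolicy` are hypothetical names made up for this example, not part of any library.

```csharp
using System;
using Xunit;

// Hypothetical abstraction that isolates the impure call (reading the clock).
public interface IClock
{
    DateTime UtcNow { get; }
}

// Fake used only by tests: it always returns the instant it was given.
public class FixedClock : IClock
{
    public FixedClock(DateTime utcNow) => UtcNow = utcNow;

    public DateTime UtcNow { get; }
}

// Hypothetical class under test. Once the clock is injected,
// the decision logic is deterministic.
public class TrialPolicy
{
    private readonly IClock _clock;

    public TrialPolicy(IClock clock) => _clock = clock;

    public bool HasExpired(DateTime trialEndsUtc) => _clock.UtcNow > trialEndsUtc;
}

public class TrialPolicyTests
{
    [Fact]
    public void trial_that_ended_yesterday_has_expired()
    {
        var now = new DateTime(2024, 1, 10, 0, 0, 0, DateTimeKind.Utc);
        var sut = new TrialPolicy(new FixedClock(now));

        var result = sut.HasExpired(now.AddDays(-1));

        Assert.True(result);
    }
}
```

Because the test controls the current time, there is no need for an if that checks what day it happens to be when the test runs.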

The Ideal approach

Aim for single responsibility tests.

Each test should validate one and only one condition or behavior. If you find yourself needing an if, it's likely that the test can be broken down into multiple, more focused tests or you need to break down your dependencies.

Avoid branching at all costs.

A test should be a straight path, not a branching tree. Instead of one test with an if statement, create multiple tests, each tailored to a specific scenario or condition. Each test should follow a clear Arrange-Act-Assert (AAA) pattern without deviating into different scenarios.

Speaking of AAA, I personally find no use for // Arrange, // Act, and // Assert comments in code, as long as you can separate the sections by empty lines.

Besides, naming the system under test “sut” and storing the outcome of the call in a “result” variable makes it clear what’s being tested (act) and that everything before it is the setup (arrange).

var result = sut.PerformTask();

I won’t dive into the assertion bit, as it is self-explanatory.
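Putting these conventions together, a complete test might look like the sketch below, with empty lines as the only separators between the three sections. `ShoppingCart` and its members are hypothetical and exist only to give the test something to exercise.

```csharp
using System.Collections.Generic;
using System.Linq;
using Xunit;

// Hypothetical class under test, invented for this illustration.
public class ShoppingCart
{
    private readonly List<decimal> _prices = new List<decimal>();

    public void Add(decimal price) => _prices.Add(price);

    public decimal Total() => _prices.Sum();
}

public class ShoppingCartTests
{
    [Fact]
    public void cart_total_is_the_sum_of_item_prices()
    {
        var sut = new ShoppingCart();
        sut.Add(10m);
        sut.Add(5.5m);

        var result = sut.Total();

        Assert.Equal(15.5m, result);
    }
}
```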

Target explicitness.

Tests should explicitly state what they're checking. When you read a test, its purpose and scenario should be immediately clear without needing to deep dive and decipher the logic.

That’s why I’m not necessarily keen on having strict test naming conventions such as [Given]_[When]_[Then] or [MethodUnderTestName]_[Scenario]_[Result].

Such conventions make reasoning about tests difficult and often require you to look further into the test in order to understand what’s actually being tested.

On top of that, the latter convention in particular encourages you to focus on implementation details and on isolating the method, instead of testing a behavior. I’ve already covered why you should strive to test units of behavior here.

I prefer a more natural, English-style phrasing, to depict the business case the test fulfils, as it makes reasoning about what’s being tested much easier.

For instance, instead of a generic name, I favor using something like user_login_with_valid_credentials_authenticates_successfully or user_login_with_invalid_credentials_fails_authentication.

Refactoring tests.

Regularly review and refactor your tests. As your codebase changes, ensure your tests remain relevant, clear, and focused. All code is a liability. Test code is still code, hence no stranger to this rule.

Diving into unit testing with xUnit

Let’s dive into some concrete code examples. For this purpose, I’m using C# with xUnit, my favorite playground.

As mentioned, we don’t optimize the code by crafting one test with multiple branches to cover multiple scenarios. Instead, we craft a specific test for each case:

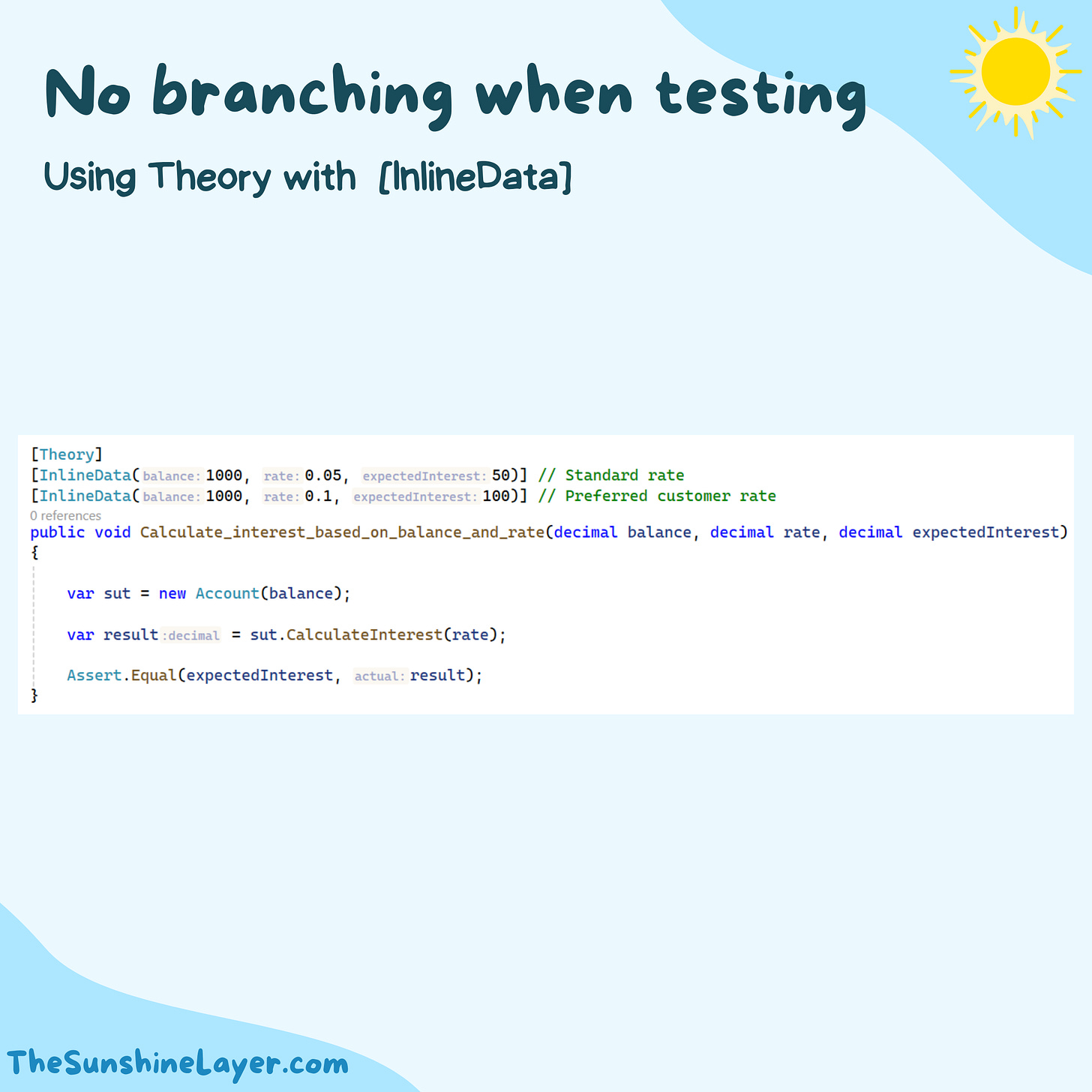
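In text form, one test per case could look roughly like this. The test names are the ones suggested earlier in the article, while `AuthService` and its hard-coded account are hypothetical stand-ins that keep the sketch self-contained.

```csharp
using Xunit;

// Hypothetical service, invented for this illustration.
public class AuthService
{
    // A single hard-coded account keeps the sketch self-contained.
    public bool Login(string user, string password) =>
        user == "alice" && password == "s3cret";
}

public class AuthServiceTests
{
    [Fact]
    public void user_login_with_valid_credentials_authenticates_successfully()
    {
        var sut = new AuthService();

        var result = sut.Login("alice", "s3cret");

        Assert.True(result);
    }

    [Fact]
    public void user_login_with_invalid_credentials_fails_authentication()
    {
        var sut = new AuthService();

        var result = sut.Login("alice", "wrong-password");

        Assert.False(result);
    }
}
```

Each test follows a single path, so a failure points straight at the scenario that broke.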

What if there are multiple edge cases to cover, all with a rather similar setup? That’s where the [Theory] attribute comes to save the day.

Using xUnit's [Theory] attribute with [InlineData] allows for parameterized tests, covering multiple scenarios without resorting to conditional logic. Each data line represents a distinct, clear scenario that is easy to pinpoint and debug. But the data is not very descriptive on its own, as it needs comments to make each case clear. Let’s fix that.
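A small sketch of a [Theory] with [InlineData] follows. The `PasswordRules` class and its rule (at least 8 characters and one digit) are assumptions made up for the example. Notice how each data row still needs a trailing comment to explain what it represents.

```csharp
using System.Linq;
using Xunit;

// Hypothetical rule set, invented for this illustration.
public static class PasswordRules
{
    // Assumed rule: at least 8 characters and at least one digit.
    public static bool IsValid(string password) =>
        password.Length >= 8 && password.Any(char.IsDigit);
}

public class PasswordRulesTests
{
    [Theory]
    [InlineData("abc1", false)]     // too short
    [InlineData("abcdefgh", false)] // long enough, but no digit
    [InlineData("abcdefg1", true)]  // long enough and contains a digit
    public void password_validity_follows_length_and_digit_rules(string password, bool expected)
    {
        var result = PasswordRules.IsValid(password);

        Assert.Equal(expected, result);
    }
}
```

xUnit reports each [InlineData] row as its own test case, so a failing row can be identified without any branching in the test body.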

Advanced Strategies for Complex Data

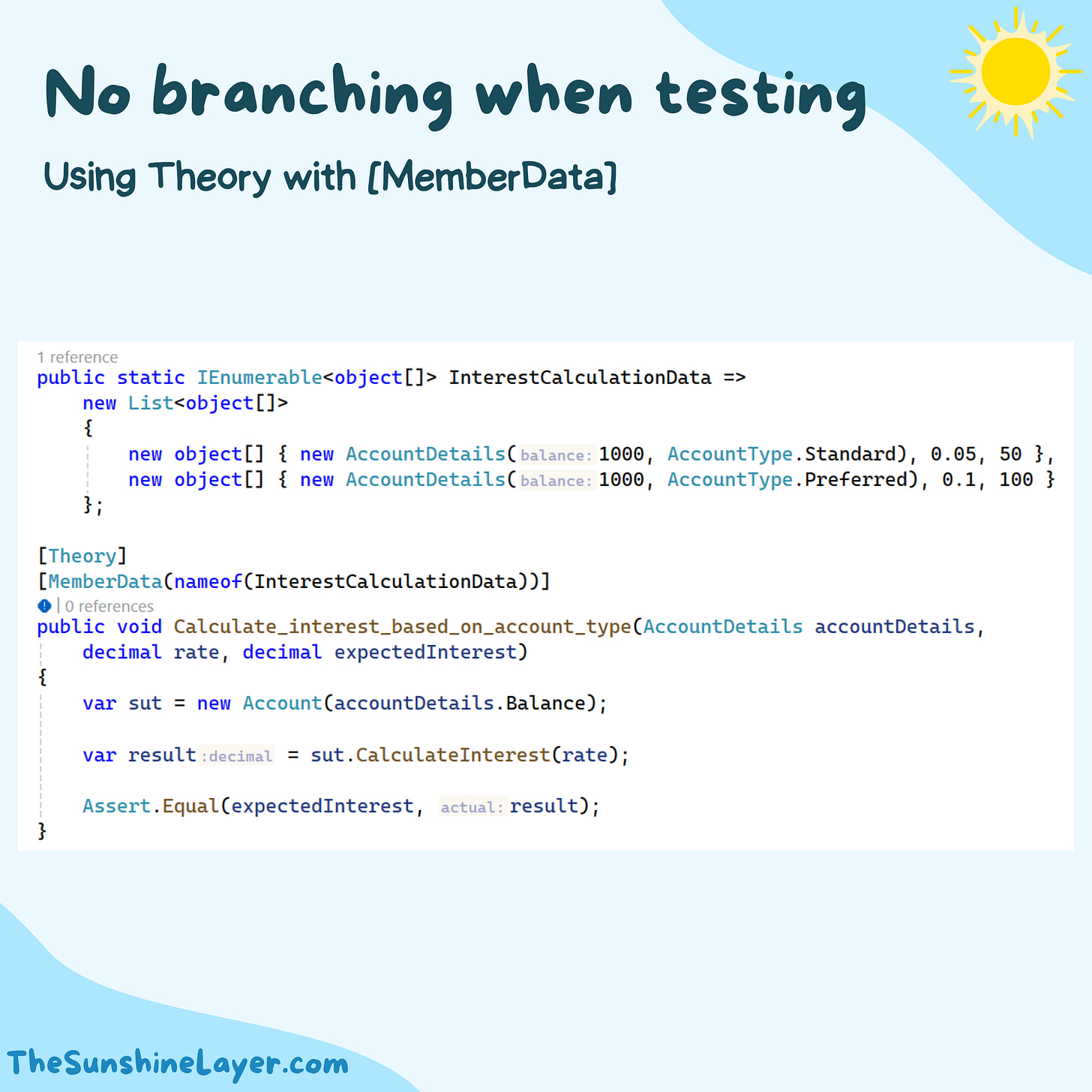

For such complex scenarios, [InlineData(...)] reaches its limits. But xUnit offers two potent solutions: [MemberData(...)] and [ClassData(...)].

[MemberData(...)]

The [MemberData(...)] attribute allows you to reference a static property, field, or method within the test class or another class that returns an IEnumerable<object[]>, where each object[] contains a set of parameters for the test method.

This approach is particularly useful when your test data is too complex for inline definitions or when you want to share test data among multiple test methods.
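Here is a minimal sketch of [MemberData] pointing at a static method in the same test class. `Order`, `ShippingCalculator`, and the fee rules are hypothetical. The rows carry an object and decimal values, neither of which can be written as an attribute argument, which is one reason [InlineData] would not work here.

```csharp
using System.Collections.Generic;
using Xunit;

// Hypothetical domain types, invented for this illustration.
public class Order
{
    public Order(decimal subtotal, string country)
    {
        Subtotal = subtotal;
        Country = country;
    }

    public decimal Subtotal { get; }
    public string Country { get; }
}

public class ShippingCalculator
{
    // Assumed rule: free shipping from 50 upwards, otherwise a flat fee per country.
    public decimal FeeFor(Order order) =>
        order.Subtotal >= 50m ? 0m : (order.Country == "DE" ? 5m : 9m);
}

public class ShippingCalculatorTests
{
    // Each object[] is one set of arguments for the test method below.
    public static IEnumerable<object[]> Orders()
    {
        yield return new object[] { new Order(60m, "DE"), 0m }; // above the free-shipping threshold
        yield return new object[] { new Order(20m, "DE"), 5m }; // domestic flat fee
        yield return new object[] { new Order(20m, "US"), 9m }; // international flat fee
    }

    [Theory]
    [MemberData(nameof(Orders))]
    public void shipping_fee_depends_on_subtotal_and_country(Order order, decimal expected)
    {
        var sut = new ShippingCalculator();

        var result = sut.FeeFor(order);

        Assert.Equal(expected, result);
    }
}
```

Using nameof(...) instead of a string literal keeps the reference to the data member safe under renames.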

[ClassData(...)]

For scenarios where even [MemberData(...)] might not be enough (perhaps because of a complex setup that you want to reuse across multiple test classes, or simply because you need data encapsulation), [ClassData(...)] steps in.

The [ClassData] attribute specifies a class that implements IEnumerable<object[]> to provide the data for the test method. This class must have a parameterless constructor.
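A sketch of the [ClassData] shape is shown below. `LeapYear` and `LeapYearCases` are hypothetical names, and the data is deliberately simple to keep the example short. In practice the rows would typically carry richer objects or a setup worth sharing across test classes.

```csharp
using System.Collections;
using System.Collections.Generic;
using Xunit;

// Hypothetical code under test, invented for this illustration.
public static class LeapYear
{
    public static bool IsLeap(int year) =>
        (year % 4 == 0 && year % 100 != 0) || year % 400 == 0;
}

// Data class: implements IEnumerable<object[]> and relies on the
// implicit parameterless constructor.
public class LeapYearCases : IEnumerable<object[]>
{
    public IEnumerator<object[]> GetEnumerator()
    {
        yield return new object[] { 2024, true };  // divisible by 4
        yield return new object[] { 2023, false }; // not divisible by 4
        yield return new object[] { 1900, false }; // century not divisible by 400
        yield return new object[] { 2000, true };  // century divisible by 400
    }

    IEnumerator IEnumerable.GetEnumerator() => GetEnumerator();
}

public class LeapYearTests
{
    [Theory]
    [ClassData(typeof(LeapYearCases))]
    public void leap_year_rules_are_applied(int year, bool expected)
    {
        var result = LeapYear.IsLeap(year);

        Assert.Equal(expected, result);
    }
}
```

Since the data lives in its own class, any other test class can reference it with the same [ClassData(typeof(LeapYearCases))] attribute.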

Conclusion

Remember, when writing code and even more so in testing, less is often more.

Avoid the temptation to create complex, conditional tests. Strive for simplicity, clarity, and focus.

By keeping if statements out of your tests and adhering to the principle of single responsibility, your tests become more reliable, understandable, and maintainable.

Pay it forward

Here are this week’s knowledge nuggets that made me pause and think:

How Disney+ Scaled to 11 Million Users on Launch Day - by Neo Kim

STARTUP VS BIG TECH: What's the difference? - by Jade Wilson

How to Self-Manage Even if You Have a Manager - by Irina Stanescu

Treat your career as a startup - by Bogdan Veliscu


No joke, I've seen tests that have had if statements in them, it makes me cringe! How do you then know if the test does not have bugs when you add conditionals and branches 🙈

Great article, thanks for sharing!

Great tips, Helen! Having multiple execution paths in a test is a bad sign. It is important to keep tests small and, if needed, use parametrized tests.

To write better unit tests, you should start by writing testable code and have a clear testing strategy. Test early and often.

Make sure your tests are simple and focused, each test should cover one aspect of the code functionality. Avoid writing overly large or overly trivial tests.